Executive Summary:

This research, conducted under the Spring 2025 Pennington Undergraduate Research Award (PURA), explores the development of efficient transformers, essential for sequence modeling tasks. Sequence modeling is a foundational component of machine learning research applicable to many fields, ranging from natural language processing to time series forecasting and image analysis. While traditional models like recurrent neural networks (RNNs) and their improved successors (LSTM, GRU) enabled deep learning models to incorporate contextual data, they suffered from limited scalability due to the lack of parallelization capabilities. This paradigm shifted in 2017 when transformer architectures were introduced, enabling parallel training and state-of-the-art performance across diverse domains. However, their quadratic time complexity has spurred interest in developing more computationally efficient alternatives.

In this project, we focused on proposing novel sequence modeling architectures with reduced computational complexity, while preserving or improving modeling accuracy. We developed mathematically principled models and validated them through extensive testing on downstream tasks, including Long Range Arena for general sequence tasks, WikiText for language modeling, and SC10 for speech classification. Such downstream tasks are closely tied to real-life applications, ensuring the high applicability of the created models.

Among the models evaluated, SAMoVAR stood out by achieving state-of-the-art performance in time series analysis tasks. By integrating autoregressive structures with efficient transformer design principles, SAMoVAR consistently outperformed competitive baselines on multivariate datasets such as ETT, traffic, solar, and electricity.

The findings of this research highlight promising directions for building scalable and effective sequence models, balancing performance and efficiency. Future work will extend this methodology to new architectures and applications, with the aim of publishing impactful results and advancing practical AI deployment.

Research Motivation:

Throughout the history of AI and machine learning, sequence modeling has been a topic of high interest. Starting in the 1980s, recurrent neural networks kickstarted the development of deep learning methods specialized for sequence modeling. Using a hidden state that carried information from the previous data points, recurrent neural network models were able to account for context, although drawbacks, including gradient vanishing and gradient explosion, were apparent. Subsequent research improved the performance of such recurrent neural networks, creating models like LSTM and GRU, which included gating mechanisms, improving the model’s performance. Although this mostly solved the gradient explosion and vanishing problem of RNNs, a big roadblock was still present in the fact that the training process wasn’t parallelizable, meaning that training these models was a very time and resource-consuming task, and scaling was pretty much impossible.

Beginning in 2017, the field of sequence modeling was revolutionized through the invention of transformers. By training query, key, and value for each timestep, transformers were able to learn detailed contextual dependencies. Most importantly, transformers could be trained in parallel without having to go step by step in the temporal direction, enabling the training of extremely large models. This revolutionary breakthrough led to a boom in the AI industry, which can be felt in everyday life through large language models like ChatGPT.

Despite the upsides highlighted in the paragraph above, transformers are still criticized for their need for computing power, with larger models requiring the extensive use of countless GPUs for training. The high computational costs of transformers are caused by the fact that their training scales in quadratic time (O(n^2)), and to address this issue, researchers have been developing sequence modeling architectures with lower time complexities. Linear transformers started the development of the field by suggesting a linear alternative to transformers, which was done through the elimination of the sigmoid activation function in context calculation. Researchers have since been creating different efficient sequence modeling structures, including efficient transformers and state-of-the-art models, which include models like S4 and Mamba, attempting to reach the perfect balance between efficiency and performance. Through my research with the Pennington Undergraduate Research Award, I aim to contribute to the advancement of state-of-the-art AI architectures, particularly in improving the efficiency of sequence modeling models.

Research Objective:

The research objectives of the Spring 2025 PURA award period were the following.

- Propose novel sequence modeling architectures capable of effectively modeling sequential data with lower computational complexity. The effectiveness of the proposed models should be backed up mathematically.

- Verify the performance of the developed models by performing the following downstream tasks.

- Natural Language Processing

- Time Series Analysis

- Image Analysis

- Speech Classification

- Etc.

Research Methodology:

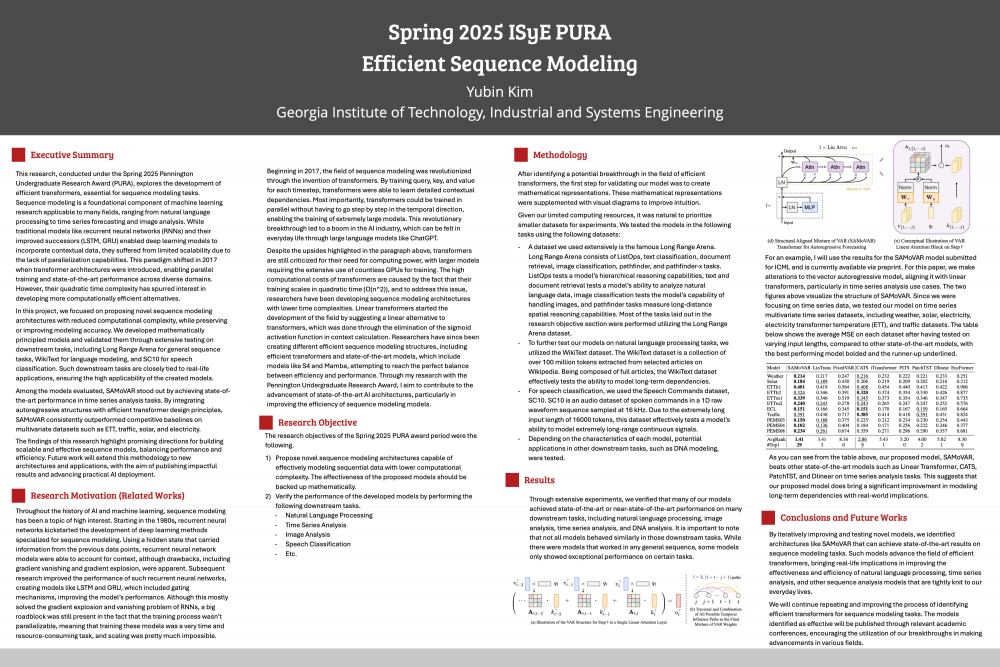

After identifying a potential breakthrough in the field of efficient transformers, the first step for validating our model was to create mathematical representations. These mathematical representations were supplemented with visual diagrams to improve intuition.

Given our limited computing resources, it was natural to prioritize smaller datasets for experiments. We tested the models in the following tasks using the following datasets:

- A dataset we used extensively is the famous Long Range Arena. Long Range Arena consists of ListOps, text classification, document retrieval, image classification, pathfinder, and pathfinder-x tasks. ListOps tests a model’s hierarchical reasoning capabilities, text and document retrieval tests a model’s ability to analyze natural language data, image classification tests the model’s capability of handling images, and pathfinder tasks measure long-distance spatial reasoning capabilities. Most of the tasks laid out in the research objective section were performed utilizing the Long Range Arena dataset.

- To further test our models on natural language processing tasks, we utilized the WikiText dataset. The WikiText dataset is a collection of over 100 million tokens extracted from selected articles on Wikipedia. Being composed of full articles, the WikiText dataset effectively tests the ability to model long-term dependencies.

- For speech classification, we used the Speech Commands dataset, SC10. SC10 is an audio dataset of spoken commands in a 1D raw waveform sequence sampled at 16 kHz. Due to the extremely long input length of 16000 tokens, this dataset effectively tests a model’s ability to model extremely long-range continuous signals.

- Depending on the characteristics of each model, potential applications in other downstream tasks, such as DNA modeling, were tested.

Results:

Through extensive experiments, we verified that many of our models achieved state-of-the-art or near-state-of-the-art performance on many downstream tasks, including natural language processing, image analysis, time series analysis, and DNA analysis. It is important to note that not all models behaved similarly in those downstream tasks. While there were models that worked in any general sequence, some models only showed exceptional performance on certain tasks.

For an example, I will use the results for the SAMoVAR model submitted for ICML and is currently available via preprint. For this paper, we make alterations to the vector autoregressive model, aligning it with linear transformers, particularly in time series analysis use cases. Since we were focusing on time series data, we tested our model on time series multivariate time series datasets, including weather, solar, electricity, electricity transformer temperature (ETT), and traffic datasets. The table attached in both the report and the poster shows the average MSE on each dataset after having tested on varying input lengths, compared to other state-of-the-art models, with the best-performing model bolded and the runner-up underlined. The table shows that our proposed model, SAMoVAR, beats other state-of-the-art models such as Linear Transformer, CATS, PatchTST, and Dlinear on time series analysis tasks. This suggests that our proposed model does bring a significant improvement in modeling long-term dependencies with real-world implications.

Conclusions and Future Work:

By iteratively improving and testing novel models, we identified architectures like SAMoVAR that can achieve state-of-the-art results on sequence modeling tasks. Such models advance the field of efficient transformers, bringing real-life implications in improving the effectiveness and efficiency of natural language processing, time series analysis, and other sequence analysis models that are tightly knit to our everyday lives.

We will continue repeating and improving the process of identifying efficient transformers for sequence modeling tasks. The models identified as effective will be published through relevant academic conferences, encouraging the utilization of our breakthroughs in making advancements in various fields.